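1. Installation requirements (confirm in advance)

Before you begin, the machines for the Kubernetes cluster must meet the following requirements:

- Three machines running CentOS 7.5+ (minimal install)

- Hardware: 2 GB RAM, 2 CPUs, 30 GB disk

2. Installation steps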

| Role | IP |
|---|---|
| master | 192.168.50.128 |
| node1 | 192.168.50.131 |
| node2 | 192.168.50.132 |

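2.1 Pre-installation preparation

Note: all 7 steps in this subsection must be performed on every node (the master and both worker nodes).

(1) Disable the firewall and SELinux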

~]# systemctl disable --now firewalld

~]# setenforce 0

~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

(3) Disable the swap partition

~]# swapoff -a

~]# sed -i.bak 's/^.*centos-swap/#&/g' /etc/fstab

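`swapoff -a` only disables swap until the next reboot. The `sed` command makes the change permanent by commenting out the swap mount line in /etc/fstab (the original file is kept as /etc/fstab.bak).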
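To confirm that swap is really off before moving on, a small check like the one below can help. This is only a sketch: the function name `swap_device_count` is made up for illustration, and it assumes the usual `/proc/swaps` layout (one header line, then one line per active swap device).

```shell
# Hypothetical helper: count active swap devices in a /proc/swaps-style file.
# With no argument it reads the real /proc/swaps.
swap_device_count() {
  swaps_file="${1:-/proc/swaps}"
  # Line 1 is the column header; each later non-empty line is one active swap device.
  awk 'NR > 1 && NF { n++ } END { print n + 0 }' "$swaps_file"
}
```

Running `swap_device_count` after `swapoff -a` should report no remaining devices; any other value means some swap area is still active.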

(4) Set the hostname

On the master node:

~]# hostnamectl set-hostname master

On the node1 worker node:

~]# hostnamectl set-hostname node1

On the node2 worker node:

~]# hostnamectl set-hostname node2

Run `bash` to start a new shell that picks up the new hostname.

(5) Add hosts entries

~]# cat >>/etc/hosts <<EOF

192.168.50.128 master

192.168.50.131 node1

192.168.50.132 node2

EOF
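Because `cat >>` appends unconditionally, running the step above twice leaves duplicate lines in /etc/hosts. If you expect to re-run the preparation, an idempotent variant can be sketched as follows; `add_host_entry` is a hypothetical helper name, not part of any tool.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that mapping is missing.
add_host_entry() {
  hosts_file=$1
  ip=$2
  name=$3
  # Look for a line whose first field is the IP and which already lists the name.
  if ! awk -v ip="$ip" -v name="$name" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 } END { exit !found }' \
      "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}
```

For example, `add_host_entry /etc/hosts 192.168.50.131 node1` can be repeated safely on every node.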

(6) Enable IPv4 forwarding and let iptables/ip6tables see bridged traffic

~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

~]# sysctl --system # apply immediately
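The two `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded, so `sysctl --system` may complain about them on a fresh machine. The sketch below shows one way to load the module persistently and to double-check the config file. Both function names and the file name under /etc/modules-load.d are illustrative choices, not something the original steps prescribe.

```shell
# Hypothetical helper: load br_netfilter now and on every boot, then re-apply sysctl.
# Must be run as root.
enable_bridge_netfilter() {
  modprobe br_netfilter &&
    echo br_netfilter > /etc/modules-load.d/br_netfilter.conf &&
    sysctl --system
}

# Hypothetical helper: print every required key that is NOT set to 1 in the given file.
missing_k8s_sysctl_keys() {
  conf_file="${1:-/etc/sysctl.d/k8s.conf}"
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    awk -F= -v k="$key" '
      { name = $1; value = $2; gsub(/[ \t]/, "", name); gsub(/[ \t]/, "", value) }
      name == k && value == "1" { found = 1 }
      END { exit !found }
    ' "$conf_file" || echo "$key"
  done
}
```

An empty result from `missing_k8s_sysctl_keys` means all three settings are present in the file.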

(7) Configure the yum repositories

All nodes use the base and epel repositories from the Alibaba Cloud mirror.

~]# mv /etc/yum.repos.d/* /tmp

~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

(8) Time zone and time synchronization

~]# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

~]# yum install dnf ntpdate -y

~]# dnf makecache

~]# ntpdate ntp.aliyun.com

2.2 Install Docker

(1) Add the Docker yum repository

~]# curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

~]# cat /etc/yum.repos.d/docker-ce.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

.......

(2) Install docker-ce

List all installable versions

~]# dnf list docker-ce --showduplicates

docker-ce.x86_64 3:18.09.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.5-3.el7 docker-ce-stable

.....

Here we install the latest version of Docker. Every node needs the Docker service.

~]# dnf install -y docker-ce docker-ce-cli

(3) Start Docker and enable it at boot

~]# systemctl enable --now docker

Check the version to verify that Docker was installed successfully

~]# docker --version

Docker version 19.03.12, build 48a66213fea

The command above shows only the Docker client version. The form below is recommended instead, because it shows both the client and the server versions clearly.

~]# docker version

Client:

Version: 19.03.12

API version: 1.40

Go version: go1.13.10

Git commit: 039a7df9ba

Built: Wed Sep 4 16:51:21 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.12

API version: 1.40 (minimum version 1.12)

Go version: go1.13.10

Git commit: 039a7df

Built: Wed Sep 4 16:22:32 2019

OS/Arch: linux/amd64

Experimental: false

(4) Switch Docker's registry mirror

There are many registry mirrors in China, such as Alibaba Cloud, Tsinghua, USTC, and Docker's official China mirror.

~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://f1bhsuge.mirror.aliyuncs.com"]

}

EOF

Restart Docker so that it loads the new registry mirror

~]# systemctl restart docker
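The `kubeadm init` output later in this guide warns that Docker is using the `cgroupfs` cgroup driver and recommends `systemd`. If you want to follow that recommendation, the same daemon.json can carry the setting. The sketch below assumes you keep the mirror URL used above; `write_docker_daemon_json` is a hypothetical helper name, and `exec-opts` with `native.cgroupdriver=systemd` is the Docker daemon option for this.

```shell
# Hypothetical helper: write a daemon.json that keeps the registry mirror from above
# and also switches Docker to the systemd cgroup driver.
write_docker_daemon_json() {
  target="${1:-/etc/docker/daemon.json}"
  cat > "$target" << 'EOF'
{
  "registry-mirrors": ["https://f1bhsuge.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `write_docker_daemon_json && systemctl restart docker`, the `Cgroup Driver` line of `docker info` shows which driver is in effect. Doing this before the cluster is initialized avoids changing the driver under a running kubelet.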

2.3 Install the Kubernetes services

(1) Add the Kubernetes yum repository

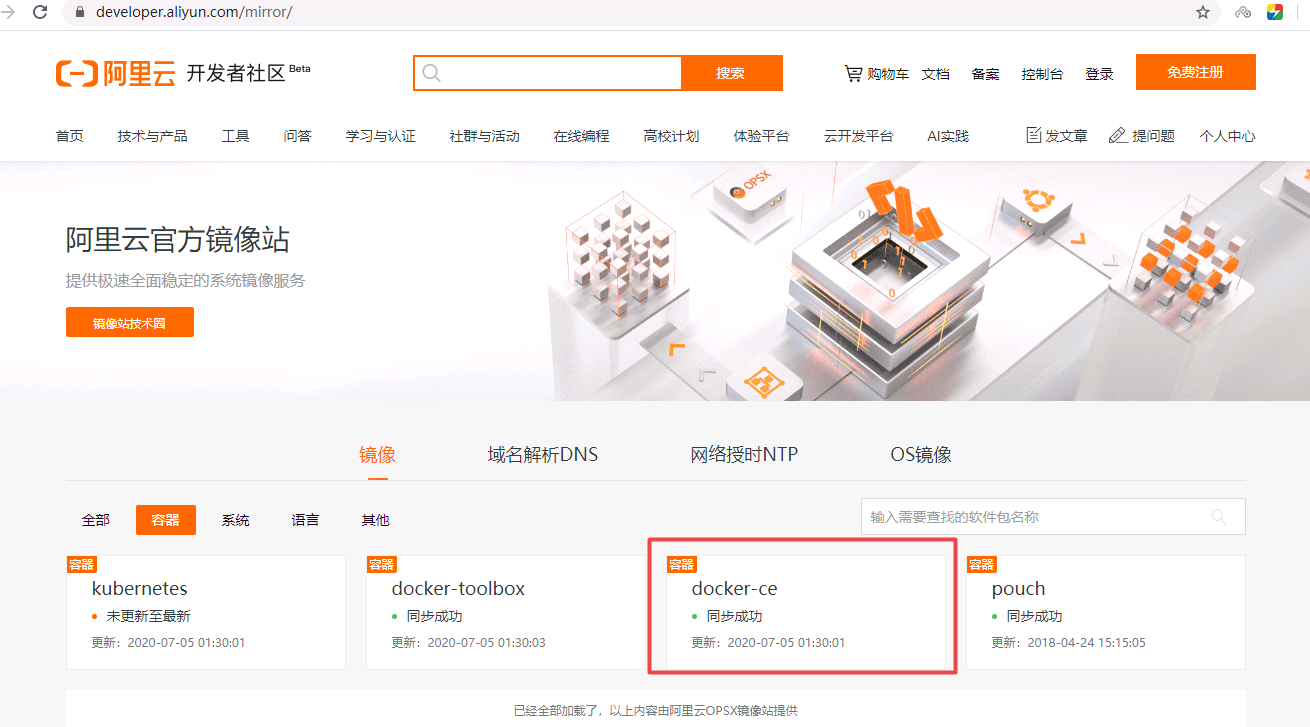

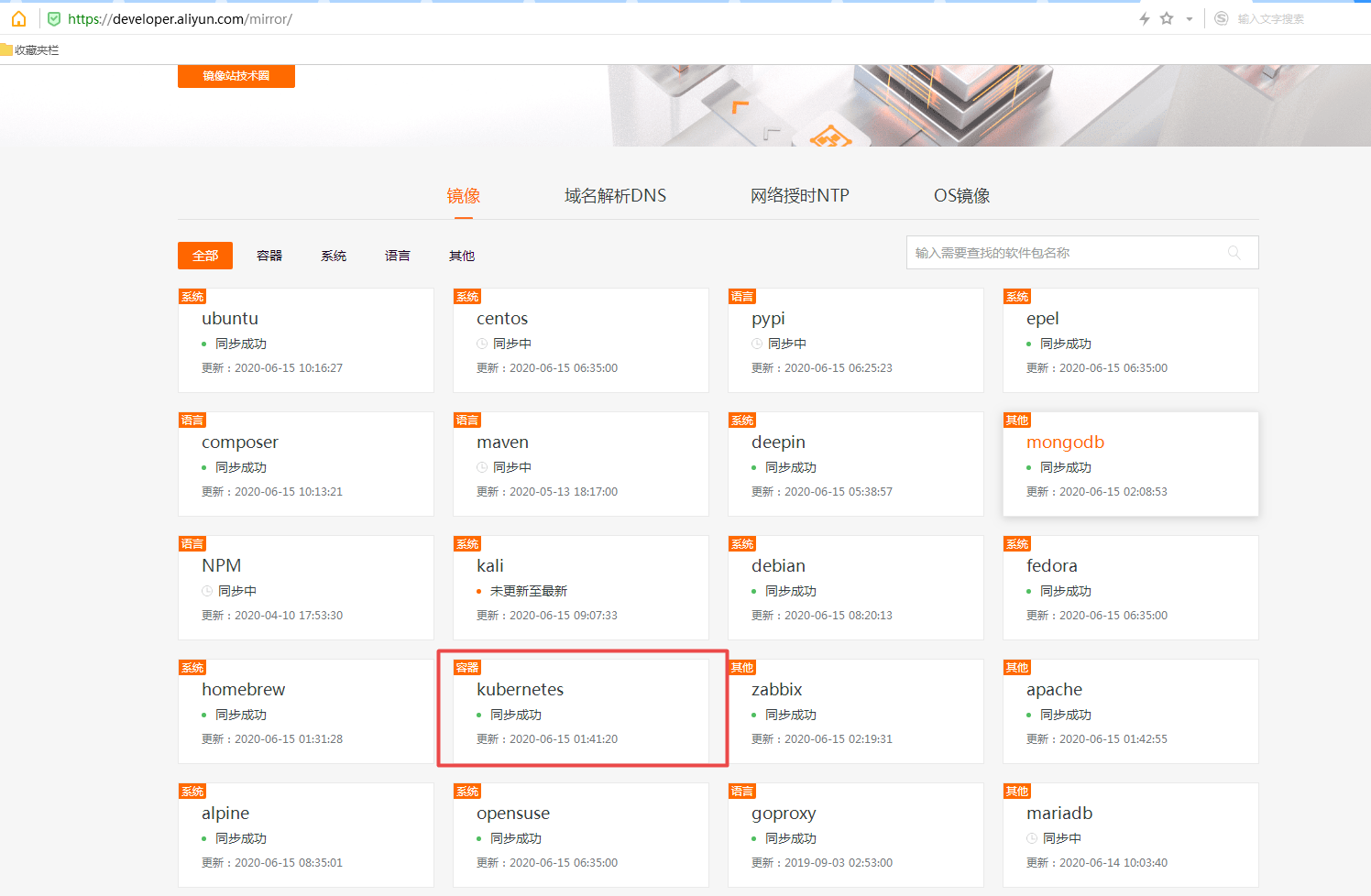

How to find it: open mirrors.aliyun.com in a browser and look for kubernetes; the repository address is shown there.

~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

(2) Install the kubeadm, kubelet, and kubectl components

Every node needs these components.

~]# dnf list kubeadm --showduplicates

kubeadm.x86_64 1.17.7-0 kubernetes

kubeadm.x86_64 1.17.7-1 kubernetes

kubeadm.x86_64 1.17.8-0 kubernetes

kubeadm.x86_64 1.17.9-0 kubernetes

kubeadm.x86_64 1.18.0-0 kubernetes

kubeadm.x86_64 1.18.1-0 kubernetes

kubeadm.x86_64 1.18.2-0 kubernetes

kubeadm.x86_64 1.18.3-0 kubernetes

kubeadm.x86_64 1.18.4-0 kubernetes

kubeadm.x86_64 1.18.4-1 kubernetes

kubeadm.x86_64 1.18.5-0 kubernetes

kubeadm.x86_64 1.18.6-0 kubernetes

Kubernetes releases move quickly, so the available versions are listed first. We install version 1.18.6, on all nodes.

~]# dnf install -y kubelet-1.18.6 kubeadm-1.18.6 kubectl-1.18.6

(3) Enable start at boot

Enable the kubelet service at boot now, but do not start it yet; kubeadm starts it later during init and join.

~]# systemctl enable kubelet

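2.4 Deploy the kubeadm master node

(1) Generate the initial configuration file

Run the following command on the master node. It may print WARNING messages, but they do not affect the deployment: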

~]# kubeadm config print init-defaults > kubeadm-init.yaml

kubeadm-init.yaml is the file used for initialization. Set advertiseAddress to the master's IP and name to the master's hostname, then adjust the commented parameters below.

[root@master ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.50.128
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # Alibaba Cloud image mirror
kind: ClusterConfiguration
kubernetesVersion: v1.18.3 # Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12 # the default is fine; a custom CIDR also works
  podSubnet: 10.244.0.0/16 # added: Pod network CIDR (matches flannel's default)
scheduler: {}
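Since the packages installed earlier and the `kubernetesVersion` in this file are set in two different places, it is easy for them to drift apart. A quick way to compare them is sketched below; `config_k8s_version` is a hypothetical helper that simply reads the value out of the YAML file.

```shell
# Hypothetical helper: print the kubernetesVersion value from a kubeadm config file.
config_k8s_version() {
  config_file="${1:-kubeadm-init.yaml}"
  # The value is the second whitespace-separated field, so a trailing "# comment" is ignored.
  awk '$1 == "kubernetesVersion:" { print $2; exit }' "$config_file"
}
```

On the master, `echo "config: $(config_k8s_version) kubeadm: $(kubeadm version -o short)"` prints both values side by side. As a rule of thumb, the version requested in the config should not be newer than the installed kubeadm.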

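(2) Pull the images in advance

If you run kubeadm init directly, it pulls the images automatically partway through, which is slow. I recommend doing this as a separate step, so pull the images first. Run the following on the master node: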

[root@master ~]# kubeadm config images pull --config kubeadm-init.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.18.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5

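If you see two WARNING lines at the start (they are not shown here), don't worry: they are only warnings and do not affect the exercise.

Now that the images have been pulled, we can start the initialization.

(3) Initialize the Kubernetes master node

Run the following command: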

[root@master ~]# kubeadm init --config kubeadm-init.yaml

[init] Using Kubernetes version: v1.18.3

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.50.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.50.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.50.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0629 21:47:51.709568 39444 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0629 21:47:51.711376 39444 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 14.003225 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

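The whole process took about 15 seconds. The initialization is this fast because the images were pulled in advance.

If, as above, there are no errors and the output ends with the `kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef` command, the master was initialized successfully.

As the final hints say, a little finishing work is still needed before the cluster can be used. Run these commands on the master node.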

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

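The master node is now initialized. One important detail is the `kubeadm join` command at the end of the output: worker nodes use it to authenticate when joining the cluster. The `--token` value is a bootstrap token (valid for 24 hours, per the `ttl` in kubeadm-init.yaml), and the `sha256:` value is a SHA-256 hash of the cluster CA's public key, which lets a joining node verify that it is talking to the right control plane. A node needs both to join this cluster.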
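If the join command gets lost, the hash can be recomputed from the CA certificate on the master. The sketch below wraps the openssl pipeline commonly used for this in a function; the name `ca_cert_hash` is made up, and the pipeline assumes the default RSA CA key that kubeadm generates.

```shell
# Hypothetical helper: recompute the value that follows "sha256:" in
# --discovery-token-ca-cert-hash from the cluster CA certificate.
ca_cert_hash() {
  ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, convert it to DER, hash it, and keep only the hex digest.
  openssl x509 -pubkey -noout -in "$ca_cert" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}
```

When the bootstrap token itself has expired, `kubeadm token create --print-join-command` on the master prints a complete, fresh join command.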

If you list the cluster's nodes now, you will see only the master itself.

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady master 2m53s v1.18.6

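Next, we add the worker nodes to the cluster.

2.5 Join the worker nodes to the Kubernetes cluster

First, recall the join command; it is the one printed after the master was initialized successfully.

Note: your token and hash will differ from mine, so use the command from your own output. Mine is shown here only for demonstration.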

~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

(1) Join node1 to the cluster

[root@node1 ~]# kubeadm join 192.168.50.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:05b84c41152f72ca33afe39a7ef7fa359eec3d3ed654c2692b665e2c4810af3e

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster

The line `This node has joined the cluster` means the node joined successfully.

(2) Join node2 to the cluster

Run the same command on node2. Once all nodes have joined, run the following on the master to list the cluster's nodes.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 2m53s v1.18.6

node1 NotReady 73s v1.18.6

node2 NotReady 7s v1.18.6

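All three nodes are listed, but the cluster is not usable yet: the STATUS column (the second field) shows NotReady for every node, because no network plugin has been installed. Here we use the flannel plugin.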
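When scripting the setup, it can be handy to list only the nodes that are not Ready yet. The filter below is a small sketch that reads `kubectl get nodes` output on stdin; `not_ready_nodes` is a hypothetical name, and it relies on STATUS being the second column, as in the output above.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS column is anything other than exactly "Ready".
# (A cordoned node shows "Ready,SchedulingDisabled" and is therefore listed too.)
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. Alternatively, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node reports Ready or the timeout expires.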

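2.6 Install the Flannel network plugin

Flannel is an overlay network tool designed by the CoreOS team for Kubernetes. Its goal is to give every host in the cluster its own complete subnet.

Flannel provides a virtual network for containers by assigning a subnet to each host. In its original form it is based on Linux TUN/TAP, encapsulates IP packets in UDP to build the overlay network, and uses etcd to track subnet assignments. The kube-flannel.yml manifest used below configures the `vxlan` backend instead and stores its state through the Kubernetes API.

(1) The default method

Most online tutorials apply the manifest with this command.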

~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

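In practice this fails for many users because network access from mainland China is restricted, so the following approach can be used instead.

(2) Switch the flannel image source

Add the following line to the local hosts file so that the host name resolves and the file can be downloaded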

199.232.28.133 raw.githubusercontent.com

Then download the flannel manifest

[root@master ~]# curl -o kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Change the image source: replace every quay.io in the YAML file with quay-mirror.qiniu.com

[root@master ~]# sed -i 's/quay\.io/quay-mirror.qiniu.com/g' kube-flannel.yml

Then apply the manifest on the master node.

[root@master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

The flannel image can now be pulled. You can also use the kube-flannel.yml file that I provide.

- Check that the kube-flannel pods are running normally

[root@master ~]# kubectl get pod -n kube-system | grep kube-flannel

kube-flannel-ds-amd64-8svs6 1/1 Running 0 44s

kube-flannel-ds-amd64-k5k4k 0/1 Running 0 44s

kube-flannel-ds-amd64-mwbwp 0/1 Running 0 44s

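(3) What to do when the image cannot be pulled

If the check above shows a kube-flannel pod that is not Running, the pod has a problem and needs further analysis.

Run `kubectl describe pod xxxx` and look for errors like these: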

Normal BackOff 24m (x6 over 26m) kubelet, master3 Back-off pulling image "quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64"

Warning Failed 11m (x64 over 26m) kubelet, master3 Error: ImagePullBackOff

or

Error response from daemon: Get https://quay.io/v2/: net/http: TLS handshake timeout

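All of these indicate that the image cannot be pulled because of network problems. I have prepared a flannel image in advance; load it on each node that reports the error.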

[root@master ~]# docker load -i flannel.tar

2.7 Verify that the nodes are usable

Wait a moment, then run the following command to check whether the nodes are usable

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 82m v1.17.6

node1 Ready 60m v1.17.6

node2 Ready 55m v1.17.6

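The nodes are now in the Ready state, which means the cluster nodes are usable.

3. Test the Kubernetes cluster

3.1 Kubernetes cluster test

(1) Create an nginx pod

Now create an nginx pod in the cluster to verify that it runs correctly.

Run the following steps on the master node: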

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

Now look at the pod and the service

[root@master ~]# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-86c57db685-kk755 1/1 Running 0 29m 10.244.1.10 node1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 443/TCP 24h

service/nginx NodePort 10.96.5.205 80:32627/TCP 29m app=nginx

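In the output, the first half is pod information and the second half is service information. The service/nginx line shows that the service is exposed on node port 32627. Remember this port.

The pod details show that the pod is running on node1, whose IP address is 192.168.50.131.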
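The node port is assigned at random, so it will differ on your cluster. Rather than reading it off by eye, it can be pulled out of the PORT(S) value. The helper below is a sketch with a made-up name, `node_port_of`, that works on strings of the form shown above.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as "80:32627/TCP".
node_port_of() {
  ports=$1
  ports=${ports#*:}              # drop the service port and the colon
  printf '%s\n' "${ports%%/*}"   # drop the "/protocol" suffix
}
```

Usage: `curl -I "http://192.168.50.131:$(node_port_of 80:32627/TCP)"` checks nginx from the command line. `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same port directly from the API.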

(2) Access nginx to verify the cluster

Now let's access it. Open a browser (Firefox is recommended); the address is